How can assessment be enhanced through the use of digital tools?

This is a big question, put to me by a Norwegian higher education department, and it needs to be taken in stages.

What is the context?

This post addresses assessment both in individual courses and at institutional level.

The question was posed in the context of higher education in Norway, where all courses should adhere to Universal Design for Learning (UDL) principles by 2020 at the latest. This means that course materials and assessments should be presented in a variety of accessible formats, not just text. Furthermore, the department is adjusting its courses to follow:

Constructive Alignment: all aspects of a course should align with each other. Consider, for example, whether a traditional exam is an appropriate summative assessment for a course that has been project-based, since the two activities do not reinforce one another.

SOLO (Structure of the Observed Learning Outcome): an alternative to Bloom’s taxonomy for ensuring that courses, tasks and assessments cover all levels of understanding, from knowledge of unrelated facts all the way up to creating new relationships between a set of facts and skills. Starting with the learning outcomes is a form of backward engineering, as advocated by Wiggins and McTighe (2005), and is a strategy for implementing constructive alignment.

Effective assessment

Assessment covers both formative and summative assessment. As digital tools become more powerful, there is a case for believing that the right combination of digital assessment tools could remove the need for a separate summative assessment, since the tools will have been collecting evidence of learners’ abilities from the start.

Assessment is a way of giving feedback to learners on how they are doing and what they need to do to become more skilled. Hattie identifies feedback as one of the strategies with the largest effect on learning, which makes it extremely important.

Mark Barnes goes further and advocates abandoning grades, citing research showing that when students receive both feedback and a grade, they disregard the feedback. Barnes suggests an iterative process that he calls SE2R, which stands for:

- S: Summarise what you see

- E: Explain what learning this demonstrates and what is missing

- R: Redirect learners to resources that will help them improve their product

- R: Resubmit an improved version of the product

He acknowledges that what he calls narrative feedback at first appears very time-consuming, but offers suggestions for making it more manageable, noting that students still value brevity in teacher feedback! It is also worth noting that in real life most tasks are iterative rather than a one-off chance to get things right. This approach would combine well with building an e-portfolio of achievements (see below).

Cope calls this recursive feedback and explains in the video below how digital tools can help.

What is being assessed?

The SOLO approach suggests that both skills and knowledge are involved.

We might call the end point competence, which implies that learners have demonstrated mastery.

For mastery to develop into competence, learners need repeated experience.

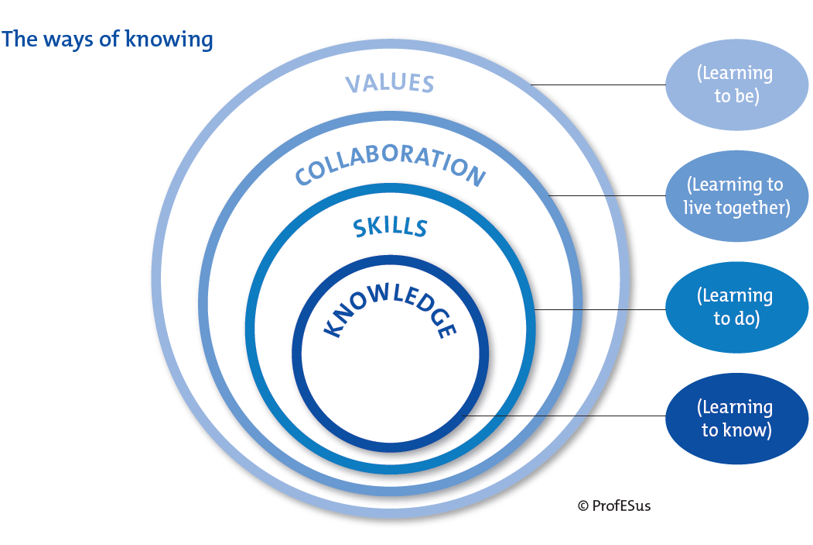

In the Prof E Sus project we proposed a model of competence as shown below:

Outside the sustainability field it may seem odd that competence includes a layer of values, but whatever our job we always operate within a set of values, so this layer is justified. We only have to think of the questions raised about how companies such as Facebook and Cambridge Analytica used their expertise to see why the values layer matters.

Can we expect digital tools to be able to assess the values dimension of learning?

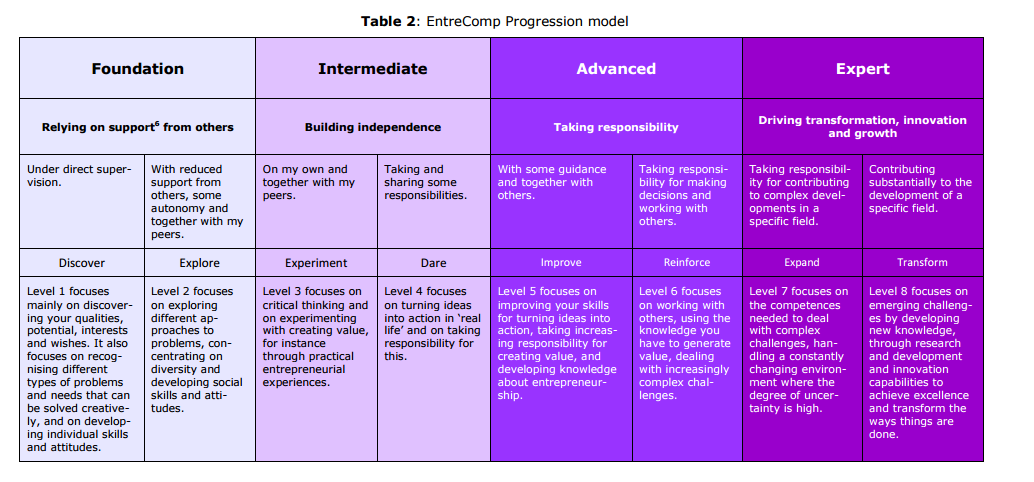

The European Union has been working extensively on describing competence, for example in the fields of digital competence and entrepreneurial skills, so we have some guidance on how the different levels of competence can be recognised.

The highest level, level 8, implies longer periods of work experience and higher levels of responsibility, so it is probably not realistic to expect students to reach it in higher education.

Micro-credentialing

If an institution has moved to competency and mastery, it may be appropriate to chunk the learning into smaller units that can then be rewarded through micro-credentials or badges, for example those from Mozilla. This is not a large step from the established practice of allocating a certain number of ECTS points to each part of a course.

Who assesses?

Traditionally, teachers do the assessing. But if constant feedback is what builds competence, this could become very time-consuming. Digital tools may help teachers with this task, but until recently this has mainly been possible only at the lower, knowledge levels of competence, using for example multiple choice quizzes. It is also important to remember that, to foster self-directed learners who can go on to initiate and take responsibility for their own work, peer feedback and self-assessment should be nurtured as important skills too.

So what is being assessed is a multi-faceted set of skills and knowledge, part of which has been demonstrated in real-life or near real-life settings. These skills need to be assessed by the learners themselves and by their peers, as well as by teachers. So how can digital tools help?

Digital tools

Cope and Kalantzis contend that lack of data is no longer the problem since, when students use a learning management system, their every keystroke is monitored. The challenge is to make effective use of the data being collected to document mastery and competence. What follows is a summary of the possibilities available so far.

Multiple choice

The multiple choice quiz (MCQ) was born of the desire to automate assessment and save teachers’ time, and was probably one of the first ways of doing so. MCQs are easy to produce and hence widely used, but they are criticised for failing to test the higher levels of the SOLO taxonomy or the softer layers of competence such as collaboration and values. There are guidelines for writing effective MCQs that reach higher SOLO levels, but MCQs cannot assess the highest levels, and in practice they are often badly formulated. See Peerwise below for an exemplary use of MCQs.
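To make the time-saving argument concrete, here is a minimal sketch of automated MCQ marking in Python. The `MCQ` class and `mark` function are hypothetical and not taken from any particular quiz tool; the sketch assumes each option carries its own feedback, so marking returns both a score and a comment on every chosen option rather than just right or wrong.

```python
from dataclasses import dataclass


@dataclass
class MCQ:
    """A hypothetical multiple choice question with feedback for every option."""
    stem: str
    options: dict[str, str]   # option key -> option text
    correct: str              # key of the correct option
    feedback: dict[str, str]  # option key -> feedback shown after answering


def mark(questions: list[MCQ], answers: list[str]) -> tuple[int, list[str]]:
    """Score the answers and collect the feedback for each chosen option."""
    score = 0
    comments = []
    for question, answer in zip(questions, answers):
        if answer == question.correct:
            score += 1
        # An unknown option key gets a generic message instead of raising an error.
        comments.append(question.feedback.get(answer, "Answer not recognised."))
    return score, comments


quiz = [
    MCQ(
        stem="Which taxonomy is offered as an alternative to Bloom's?",
        options={"a": "SOLO", "b": "ECTS", "c": "UDL"},
        correct="a",
        feedback={
            "a": "Correct: SOLO describes increasing structural complexity.",
            "b": "ECTS is a credit system, not a taxonomy.",
            "c": "UDL is a design framework, not a taxonomy.",
        },
    ),
]

score, comments = mark(quiz, ["b"])
print(score, comments)  # 0 ['ECTS is a credit system, not a taxonomy.']
```

The per-option feedback is what turns an automated quiz from a pure scoring device into a formative tool, which is the quality the post later praises in Peerwise.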

Can we move on from MCQs?

It is now possible to assess free-text input digitally for content rather than just for grammar and style. This removes one of the disadvantages of MCQs: that the correct answer is visible among the options. ETS, for example, has been doing this globally for some time in its automated TOEFL English testing. Assessing longer essays is not yet possible, though it is legitimate to question whether the ability to write long essays is vocationally relevant in most contexts.
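Production systems such as those used by ETS are far more sophisticated than anything shown here. The toy Python sketch below only illustrates the basic idea of checking free text for content rather than form: it tests whether each concept expected in a good answer is mentioned. The function name, marking scheme and indicator words are all hypothetical.

```python
import re


def concept_coverage(answer: str, concepts: dict[str, set[str]]) -> dict[str, bool]:
    """Report, for each expected concept, whether any of its indicator words appears."""
    # Lower-case the answer and split it into alphabetic words.
    words = set(re.findall(r"[a-z]+", answer.lower()))
    return {concept: bool(words & indicators) for concept, indicators in concepts.items()}


# Hypothetical marking scheme: each concept is signalled by a few indicator words.
scheme = {
    "alignment": {"align", "aligned", "alignment"},
    "feedback": {"feedback"},
    "outcomes": {"outcome", "outcomes", "objectives"},
}

answer = "Exams should be aligned with the learning outcomes of a course."
print(concept_coverage(answer, scheme))
# {'alignment': True, 'feedback': False, 'outcomes': True}
```

Keyword matching like this is easy to game and cannot judge whether ideas are connected correctly, which is exactly why real automated scoring relies on much richer models.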

The future: AI

Artificial Intelligence can help in different ways:

- Automated grading of essays and other open input (ETS)

- ID checking

- Voice input processing

- Automated assessment creation eg Wildfire

- Learning analytics

- Adaptive learning eg Duolingo and other language learning apps

- Intelligent teaching assistant chat bots

- Can be used both to cheat and to combat cheating

- Ethics of each AI application need to be considered

The snippet below shows Donald Clark explaining some aspects of his Wildfire tool.


Although it concerns course evaluation rather than summative or formative feedback, Hubert.ai, an AI system for collecting course feedback, is also worth exploring, and you can try it out for yourself for free.

Implications of UDL

Universal Design for Learning is not just about varying content input but also about varying student response channels. It is possible to design assessments that require or allow audio, visual or video responses, both in student tasks and in teacher feedback. As an example, Russell Stannard in the UK has written extensively about the benefits of giving students video feedback using a screen-recording tool such as Camtasia.

Moving towards the real world

Authenticity is another important requirement for effective assessment, as noted by JISC, for example. Assessing authentic tasks helps to move students towards the vocational competence that employers value. Authenticity can be achieved through project-based and work-based learning, but also through gamification, simulations and virtual reality.

Self-assessment

A common goal in video games is to beat your own previous score. In education this is known as ipsative assessment. Being able to assess your own progress against agreed benchmarks should be considered a vital life skill. Implementing Visible Thinking helps with this at younger ages, while at higher levels e-portfolios such as Mahara may be appropriate.
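Ipsative assessment compares learners with their own earlier performance rather than with each other. As a minimal sketch, assuming a simple list of numeric scores from successive attempts at the same task (the function name and report wording are hypothetical), a tool could report progress like this:

```python
def ipsative_report(scores: list[float]) -> list[str]:
    """Compare each attempt with the learner's own best previous attempt."""
    report = []
    best = None  # no personal best until the first attempt is recorded
    for attempt, score in enumerate(scores, start=1):
        if best is None:
            report.append(f"Attempt {attempt}: baseline {score}")
        elif score > best:
            report.append(f"Attempt {attempt}: new personal best ({score} > {best})")
        else:
            report.append(f"Attempt {attempt}: {score}, personal best is still {best}")
        best = score if best is None else max(best, score)
    return report


for line in ipsative_report([52, 61, 58, 70]):
    print(line)
# Attempt 1: baseline 52
# Attempt 2: new personal best (61 > 52)
# Attempt 3: 58, personal best is still 61
# Attempt 4: new personal best (70 > 61)
```

Comparing against the personal best, rather than only the previous attempt, mirrors the video-game convention the paragraph describes.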

Peer feedback

The work of Eric Mazur shows that peer instruction is very effective when combined with a range of other strategies such as Just in Time Teaching and in-class clickers. All of these approaches are much easier to implement with the appropriate digital tools.

Peergrade is a tool in which students upload their work, which is then randomly allocated to another student for peer feedback. Reviewers follow rubrics to help them assess the work.
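The random allocation step can be illustrated with a short Python sketch. This is not Peergrade’s actual algorithm, which is not described here; it assumes one reviewer per submission and shows one standard way to allocate work at random while guaranteeing that nobody reviews their own. The function name and student names are hypothetical.

```python
import random
from typing import Optional


def allocate_reviews(students: list[str], seed: Optional[int] = None) -> dict[str, str]:
    """Randomly pair each reviewer with someone else's submission.

    Shuffling the class and having each student review the next one in the
    shuffled circle guarantees that nobody reviews their own work and that
    every submission receives exactly one review.
    """
    if len(students) < 2:
        raise ValueError("Peer review needs at least two students.")
    order = students[:]  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(order)
    # reviewer -> author whose work they will assess
    return {order[i]: order[(i + 1) % len(order)] for i in range(len(order))}


allocation = allocate_reviews(["Ada", "Bjørn", "Chen", "Dina"], seed=1)
for reviewer, author in allocation.items():
    print(f"{reviewer} reviews {author}")
```

Passing a fixed `seed` makes an allocation reproducible, which can help if a teacher needs to audit it later. Giving each submission several reviewers could be handled by also assigning the student two places further round the circle.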

Peerwise allows students to construct multiple choice questions for their peers to try out. A good MCQ includes high-quality options and feedback for both correct and incorrect responses. Students vote up the questions they like and find useful, which gives the tool a game element too.

Electronic Management of Assessment (EMA)

These are digital tools that are implemented at organisational level and take care of high-stakes assessment, often summative exams. They offer a variety of response types wrapped up in a secure environment that verifies candidates’ ID and ensures that they have access only to the specified resources while taking the exam. The three main players are Flexite, Inspera and Wiseflow (incidentally, mostly Nordic companies).

Implementing digital assessment

Key questions

- Do you have an overall assessment strategy?

- Is it visible to staff & students?

- How do your students behave in relation to the current assessment procedures?

- How will they react to changes? How will you prepare them?

- What is your vision of the end of course/module state? (Backward engineering)

- Deep learning (not easy to automate) versus surface learning?

Takeaways

- Establish your current baseline

- Start with the end result, the learning objectives

- Consider competency as a benchmark

- AI will infuse all aspects of HE including final assessments & UDL

- Exploit peer and self-assessment

- The Nordic region leads in EMA

This post is also available as an infographic.

Sources

- Acenet infographic: Competency Based Education

- Competency-based Education Network

- Conrad and Openo (2018) Assessment Strategies for Online Learning: Engagement and Authenticity, http://www.aupress.ca/index.php/books/120279

- Cope, B. and Kalantzis, M. (2015) Sources of Evidence-of-Learning: Learning and assessment in the era of big data, Open Review of Educational Research, 2:1, 194-217, DOI: 10.1080/23265507.2015.1074869

- Gibbs, G. and Simpson, C. (2004) Conditions Under Which Assessment Supports Student Learning, Learning and Teaching in Higher Education, 1, pp. 3-31, http://resources.glos.ac.uk/shareddata/dms/2B70988BBCD42A03949CB4F3CB78A516.pdf

- JISC (Joint Information Systems Committee) https://www.jisc.ac.uk/

- Moodle competency frameworks https://www.eclass4learning.com/moodle-competencies/

- Peerwise https://peerwise.cs.auckland.ac.nz/docs/index.php

- Severengiz, M. et al. (2018) Influence of Gaming Elements on Summative Assessment in Engineering Education for Sustainable Manufacturing, Procedia Manufacturing 21, 429–437

- Wieman, C. Course Transformation Guide: A guide for instructors interested in transforming a course, and their instruction, to use research-based principles and improve student learning

- Wiggins, G. and McTighe, J. (2005) Understanding by Design